Hugging Face Alternatives: Leading Open-Source ML Model Platforms

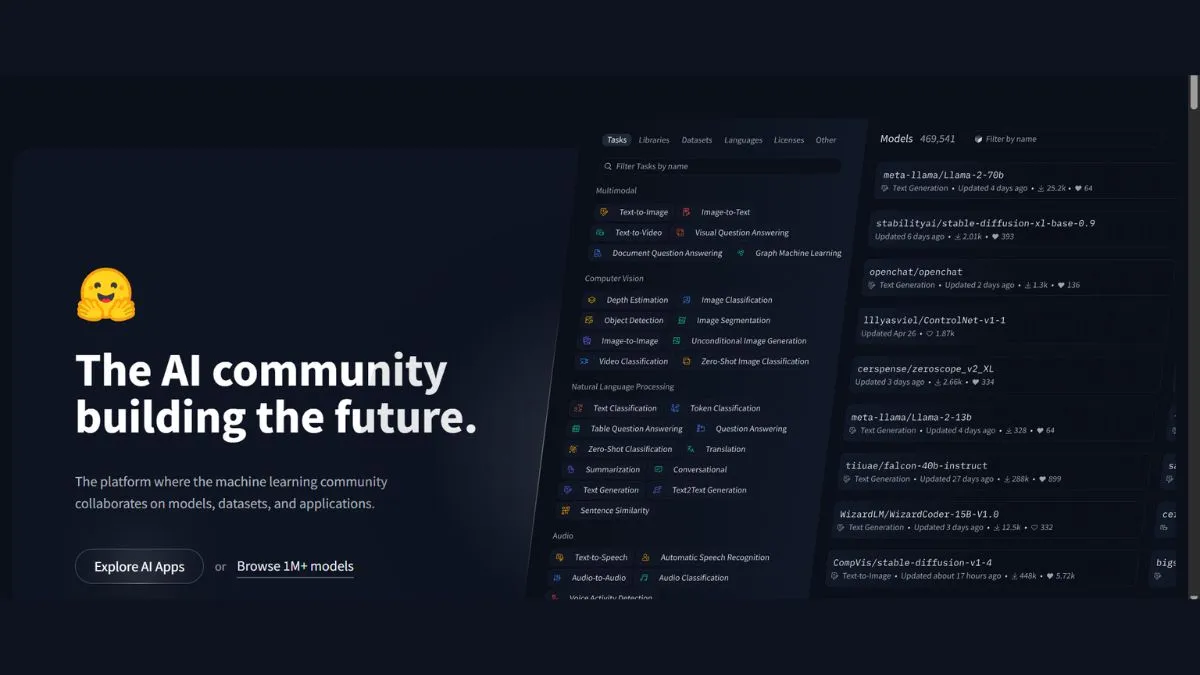

The machine learning landscape has evolved dramatically, with developers constantly seeking the best platforms to build, train, and deploy AI models. While Hugging Face has established itself as a prominent player in the AI community, numerous alternatives offer unique features, specialized capabilities, and different approaches to machine learning workflows. Whether you're looking for enterprise-grade solutions, research-focused frameworks, or production-ready deployment tools, understanding your options is crucial for making informed decisions.

Why Consider Hugging Face Alternatives?

Hugging Face has earned its reputation through community-driven model sharing and open-source development, but specific project requirements might call for different solutions. Organizations may need tighter security controls, enterprise-level support, specialized infrastructure, or custom workflows that other platforms handle more effectively.

The decision between frameworks isn't just technical—it's strategic. Your choice affects scalability, performance, security, budget, and long-term maintenance for years to come. Some teams require the flexibility of open-source solutions, while others benefit from fully managed, enterprise-grade platforms with dedicated support.

Top Hugging Face Alternatives

1. TensorFlow: Google's Production-Ready Powerhouse

Developed by Google Brain and released in 2015, TensorFlow was designed to handle scalable, production-grade machine learning workflows. This comprehensive open-source platform covers the entire machine learning lifecycle from development to deployment.

Key Features:

- Scalable Architecture: Handles massive production workloads with distributed training capabilities

- TensorFlow Serving: Battle-tested system for high-throughput, low-latency inference in production environments

- TensorFlow Lite: Industry standard for deploying optimized models on mobile and edge devices

- TPU Support: Native optimization for Google's Tensor Processing Units

- Comprehensive Ecosystem: Includes TensorBoard for visualization, TFX for production pipelines, and extensive tooling

Best For:

- Enterprise-scale applications requiring robust deployment infrastructure

- Mobile and edge AI implementations

- Organizations already invested in the Google Cloud ecosystem

- Teams prioritizing production stability over research flexibility

Limitations:

- Steeper learning curve compared to more recent frameworks

- More complex setup for experimental research

- Can feel less intuitive for rapid prototyping

2. PyTorch: The Research Community's Favorite

PyTorch's design is centered around flexibility and user-friendliness, with its dynamic computation graph allowing developers to change model behavior on the fly. Originally launched by Facebook's AI Research lab in 2016, PyTorch has become the dominant framework in academia and research.

Key Features:

- Dynamic Computation Graphs: Modify and debug models in real-time with eager execution

- Pythonic Interface: Clean, intuitive API that feels natural to Python developers

- TorchScript: Enables conversion to static graphs for production deployment

- ONNX Support: Excellent inter-framework compatibility

- Strong Research Community: PyTorch dominates in research and reportedly holds over 55% of production share as of Q3 2025

Best For:

- Research and prototyping where rapid iteration is essential

- NLP and generative models (GPT, Llama, Stable Diffusion)

- Teams that prioritize code readability and debugging ease

- Academic projects and experimental architectures

Limitations:

- Historically weaker production deployment tools (though improving)

- Smaller enterprise ecosystem compared to TensorFlow

- Less optimized for mobile deployment

3. Amazon SageMaker: AWS's End-to-End ML Platform

Amazon SageMaker provides a fully managed machine learning service that simplifies building, training, and deploying models at scale. It integrates seamlessly with AWS infrastructure and offers comprehensive MLOps capabilities.

Key Features:

- Unified ML Platform: Combines data processing, model development, and deployment

- AutoML Capabilities: Automated model selection and hyperparameter tuning

- Built-in Algorithms: Pre-configured algorithms optimized for AWS infrastructure

- Data Integration: Direct access to Amazon S3 data lakes and Redshift data warehouses

- Studio Notebooks: Collaborative environment for real-time teamwork

Best For:

- Organizations heavily invested in the AWS ecosystem

- Teams requiring comprehensive MLOps and governance

- Enterprise applications needing scalability and security

- Data scientists who want managed infrastructure

Limitations:

- Requires a stable internet connection for cloud access

- Can be expensive for extensive usage

- Vendor lock-in with AWS services

4. OpenAI API: Enterprise-Ready AI Integration

While Hugging Face relies on community models, OpenAI offers polished, enterprise-oriented APIs with data encrypted in transit and at rest, which can suit workloads that handle sensitive data. OpenAI provides access to its language models through a simple API.

Key Features:

- GPT Models: Access to state-of-the-art language models including GPT-4 and GPT-5

- Plug-and-Play Integration: Add AI capabilities to applications in minutes

- Enterprise Security: Data encryption, with support for HIPAA and GDPR compliance subject to the appropriate agreements (verify against current documentation)

- Continuous Updates: Regular access to latest model improvements

- Realtime API: Build natural-sounding voice agents for customer experiences

Best For:

- Production applications requiring reliable NLP capabilities

- Businesses in regulated industries (healthcare, legal, finance)

- Teams wanting pre-trained models without infrastructure management

- Applications needing consistent, predictable performance

Limitations:

- Can become costly for startups as usage grows

- Closed-source with limited customization options

- Usage-based pricing can be unpredictable

- Less control over underlying model architecture

5. Google Vertex AI: Unified ML Platform

Google Vertex AI is a unified ML platform designed to help companies build, deploy, and scale machine learning models faster. It provides end-to-end solutions with deep integration into the Google Cloud ecosystem.

Key Features:

- Unified Workspace: Single platform for the entire ML lifecycle

- AutoML: Build high-quality models with minimal machine learning expertise

- MLOps Integration: Comprehensive tools for model monitoring and management

- Pre-trained APIs: Ready-to-use models for vision, language, and structured data

- Scalability: Enterprise-grade infrastructure for handling massive workloads

Best For:

- Tech companies requiring enterprise-grade integration

- Organizations using Google Cloud Platform

- Teams needing comprehensive model monitoring

- Applications requiring consistent uptime and SLAs

Limitations:

- Complex pricing structure

- Steeper learning curve for platform-specific features

- Best suited for Google Cloud users

6. Microsoft Azure Machine Learning Studio

Azure Machine Learning Studio stands out with its user-friendly drag-and-drop interface, making machine learning more accessible to developers and data scientists of all skill levels. This platform provides comprehensive data services and robust integration within the Microsoft ecosystem.

Key Features:

- Drag-and-Drop Interface: Visual model building for less code-intensive development

- Comprehensive Data Services: Built-in data preparation and management tools

- Azure Integration: Deep connections with Microsoft Azure services

- Enterprise Support: Dedicated support for mission-critical applications

- Hybrid Deployment: Support for cloud, on-premises, and edge deployments

Best For:

- Organizations using Microsoft Azure infrastructure

- Teams with varying levels of ML expertise

- Enterprise applications requiring strong governance

- Hybrid cloud deployments

Limitations:

- Higher setup costs compared to some alternatives

- Learning curve for Azure-specific services

- Can be overwhelming for simple use cases

7. Replicate: Hosted Model Inference Platform

Replicate offers a hosted way to serve open-source models through inference APIs, great for testing or integrating models quickly without setting up infrastructure. This platform makes machine learning accessible by simplifying model deployment and execution.

Key Features:

- One-Click Deployment: Run models without infrastructure setup

- Open-Source Models: Access a diverse collection of community models

- Model Exploration: Preview results before implementation

- API-First Design: Simple REST API for easy integration

- Pay-Per-Use Pricing: Cost-effective for experimentation and small-scale projects

Best For:

- Rapid prototyping and testing

- Developers wanting to experiment with various models

- Small-scale applications without infrastructure teams

- Projects requiring quick model integration

Limitations:

- Less control over infrastructure and optimization

- May not scale well for high-volume production use

- Limited customization options

8. BentoML: Open-Source Model Serving

BentoML is well suited to turning Hugging Face models into self-hosted REST APIs using Python. It is lightweight and open-source, with a developer-friendly interface. This framework simplifies the transition from model development to production deployment.

Key Features:

- Model Packaging: Unified format for packaging ML models

- High Performance: The project has claimed up to 100x the throughput of a plain Flask-based model server, largely through batching; measure on your own workload

- Micro-Batching: Intelligent request batching for optimal performance

- DevOps Integration: Works seamlessly with existing infrastructure tools

- Multi-Framework Support: Compatible with TensorFlow, PyTorch, and more
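The micro-batching idea listed above can be illustrated with a minimal, framework-free sketch. It is not BentoML's implementation: the function `micro_batch` and the stand-in `fake_model` are hypothetical names, and a real server would also enforce a latency window rather than batching a fixed list of pending requests.

```python
from typing import Callable, List, Sequence


def micro_batch(
    requests: Sequence[float],
    predict_batch: Callable[[List[float]], List[float]],
    max_batch_size: int = 4,
) -> List[float]:
    """Group pending requests into batches and make one model call per batch."""
    results: List[float] = []
    for start in range(0, len(requests), max_batch_size):
        batch = list(requests[start:start + max_batch_size])
        # One call handles the whole batch, amortizing per-call overhead.
        results.extend(predict_batch(batch))
    return results


# Hypothetical stand-in for a model; records the size of each batch it receives.
calls: List[int] = []


def fake_model(batch: List[float]) -> List[float]:
    calls.append(len(batch))
    return [x * 2 for x in batch]


outputs = micro_batch([1.0, 2.0, 3.0, 4.0, 5.0], fake_model, max_batch_size=2)
print(outputs)  # [2.0, 4.0, 6.0, 8.0, 10.0]
print(calls)    # [2, 2, 1]
```

Five requests result in three model calls instead of five, which is where the throughput gain comes from when each call carries fixed overhead.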

Best For:

- Teams wanting self-hosted solutions

- Python-first development workflows

- Organizations requiring full infrastructure control

- Cost-conscious projects avoiding cloud vendor lock-in

Limitations:

- Requires DevOps knowledge for optimal setup

- Self-managed infrastructure responsibility

- Smaller community compared to major frameworks

9. Northflank: Full-Stack ML Deployment

Northflank is built for teams wanting to run Hugging Face models with full control over infrastructure, fine-tune them, deploy APIs, and run supporting services like Postgres or Redis in one place. This platform provides comprehensive infrastructure management for ML workflows.

Key Features:

- Complete Infrastructure Control: Manage models, databases, and services together

- Fine-Tuning Support: Built-in capabilities for model customization

- Multi-Service Orchestration: Run ML models alongside supporting infrastructure

- Developer Experience: Streamlined workflows for ML teams

- Flexible Deployment: Support for various cloud providers

Best For:

- Teams requiring comprehensive infrastructure management

- Organizations needing both ML and traditional services

- Projects requiring extensive model fine-tuning

- Development teams wanting streamlined workflows

Limitations:

- More complex than managed services

- Requires understanding of infrastructure concepts

- May be overkill for simple use cases

10. Modal: Serverless GPU Computing

Modal is useful for running Python functions or GPU jobs in the cloud, providing a serverless approach to machine learning workloads without infrastructure management overhead.

Key Features:

- Serverless Architecture: No infrastructure management required

- GPU Access: On-demand GPU resources for training and inference

- Python-Native: Write standard Python code that scales automatically

- Cost Efficiency: Pay only for actual compute time

- Quick Setup: Minimal configuration to get started

Best For:

- Individual developers and small teams

- Intermittent ML workloads

- Experimentation and prototyping

- Teams wanting to avoid infrastructure management

Limitations:

- Less suitable for continuous, high-volume workloads

- Limited control over underlying infrastructure

- Vendor lock-in considerations

Comparison Matrix: Key Features at a Glance

| Platform | Best For | Deployment | Learning Curve | Cost Model |
|---|---|---|---|---|
| TensorFlow | Production & Enterprise | Excellent | Steep | Open-source + Cloud costs |
| PyTorch | Research & Prototyping | Good (improving) | Moderate | Open-source + Cloud costs |
| Amazon SageMaker | AWS Integration | Excellent | Moderate | Pay-per-use |
| OpenAI API | Quick Integration | Managed | Easy | Usage-based |
| Google Vertex AI | GCP Integration | Excellent | Moderate | Pay-per-use |
| Azure ML Studio | Microsoft Ecosystem | Excellent | Moderate | Pay-per-use |
| Replicate | Rapid Testing | Managed | Easy | Pay-per-use |
| BentoML | Self-Hosted APIs | Good | Moderate | Open-source + Infrastructure |
| Northflank | Full-Stack Control | Excellent | Moderate-Steep | Subscription |
| Modal | Serverless GPU | Good | Easy | Pay-per-compute |

Making the Right Choice: Decision Framework

For Research Teams:

Choose PyTorch if you prioritize flexibility, rapid experimentation, and cutting-edge model architectures. PyTorch leads in research community adoption and reportedly holds over 55% of production share as of Q3 2025.

For Enterprise Applications:

Choose TensorFlow or a cloud platform (SageMaker, Vertex AI, Azure ML) when you need robust deployment tools, enterprise support, and production-grade infrastructure.

For Quick Integration:

Choose OpenAI API or Replicate when you want pre-trained models with minimal setup and don't need extensive customization.

For Cost Optimization:

Choose open-source options (TensorFlow, PyTorch, BentoML) when you have infrastructure expertise and want to minimize ongoing costs.

For Startups:

Choose Modal or Replicate for serverless simplicity and pay-per-use pricing that scales with your growth.
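As a rough illustration, the recommendations above can be encoded as a small lookup table and combined when a team has more than one priority. The priority keys and the `shortlist` function are hypothetical names chosen for this sketch; the platform lists simply mirror the framework above.

```python
from typing import Dict, List

# Mirrors the decision framework above; the keys are illustrative labels.
RECOMMENDATIONS: Dict[str, List[str]] = {
    "research": ["PyTorch"],
    "enterprise": ["TensorFlow", "Amazon SageMaker", "Google Vertex AI", "Azure ML"],
    "quick_integration": ["OpenAI API", "Replicate"],
    "cost_optimization": ["TensorFlow", "PyTorch", "BentoML"],
    "startup": ["Modal", "Replicate"],
}


def shortlist(priorities: List[str]) -> List[str]:
    """Rank platforms by how many of the given priorities recommend them."""
    counts: Dict[str, int] = {}
    for priority in priorities:
        for platform in RECOMMENDATIONS[priority]:
            counts[platform] = counts.get(platform, 0) + 1
    # Most-recommended first; ties are broken alphabetically for stable output.
    return sorted(counts, key=lambda name: (-counts[name], name))


print(shortlist(["quick_integration", "startup"]))
# ['Replicate', 'Modal', 'OpenAI API']
```

A platform that appears under several of your priorities rises to the top, which makes it a sensible first candidate to prototype with.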

Emerging Trends in ML Platforms for 2025

1. Framework Interoperability

Keras 3 might be the quiet revolution: one codebase with three backends, letting you switch among TensorFlow, PyTorch, and JAX with a configuration change. This trend toward framework-agnostic tools reduces vendor lock-in.
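A minimal sketch of that backend switch follows. Keras 3 reads the `KERAS_BACKEND` environment variable when it is first imported; the helper `select_keras_backend` is a hypothetical wrapper written for this example, and the `import keras` line is left commented out so the snippet runs without Keras installed.

```python
import os

# Backend names accepted by Keras 3 for its three main backends.
SUPPORTED_BACKENDS = ("tensorflow", "jax", "torch")


def select_keras_backend(name: str) -> None:
    """Set KERAS_BACKEND; call this before the first `import keras` in the process."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(f"unsupported backend: {name!r}")
    os.environ["KERAS_BACKEND"] = name


select_keras_backend("jax")
# import keras  # uncomment where Keras 3 is installed; it would now run on JAX
print(os.environ["KERAS_BACKEND"])  # jax
```

The backend is fixed once Keras has been imported, so the variable has to be set at process start rather than toggled mid-run.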

2. Production-Ready Research Tools

The gap between research and production frameworks continues narrowing. PyTorch's improvements in deployment capabilities and TensorFlow's enhanced ease of use mean developers can use the same tools throughout the ML lifecycle.

3. Specialized Hardware Optimization

Both TensorFlow and PyTorch now offer optimized support for GPUs, TPUs, and specialized AI accelerators, with automatic mixed precision training becoming standard.

4. MLOps Integration

Modern platforms increasingly include built-in MLOps capabilities—model versioning, experiment tracking, automated retraining, and monitoring—as core features rather than add-ons.

Performance Considerations

Training Performance:

PyTorch's torch.compile() can be enabled with a single line of code, and speedups of roughly 20-25% are commonly cited. TensorFlow's XLA is cited at a comparable 15-20% for larger models. Actual gains depend on the model, hardware, and workload.

Inference Optimization:

Both frameworks support TensorRT integration for production inference, with TensorFlow Serving maintaining its reputation as the gold standard for high-throughput serving.

Memory Efficiency:

Modern frameworks implement dynamic memory allocation and gradient checkpointing, but specific performance depends on model architecture, batch size, and hardware configuration.

Integration and Ecosystem

Development Tools:

- TensorFlow: TensorBoard, TensorFlow Extended (TFX), TensorFlow Hub

- PyTorch: TorchServe, PyTorch Lightning, Weights & Biases integration

- Cloud Platforms: Integrated notebooks, experiment tracking, automated deployments

Community Resources:

Both TensorFlow and PyTorch maintain extensive documentation, tutorials, and active communities. Python, TensorFlow, and PyTorch are frequently among the most requested skills in ML job listings, so experience with either framework is highly valuable.

Third-Party Libraries:

Extensive ecosystems surround both major frameworks, including specialized libraries for computer vision (torchvision, TensorFlow Object Detection), NLP (transformers, TensorFlow Text), and reinforcement learning.

Security and Compliance

Enterprise Requirements:

Cloud-based platforms (SageMaker, Vertex AI, Azure ML) support common compliance programs (such as SOC 2, HIPAA, and GDPR) and provide enterprise security features including encryption at rest and in transit, role-based access control, and audit logging.

Self-Hosted Solutions:

Open-source frameworks (TensorFlow, PyTorch) combined with self-hosted deployment (BentoML, Northflank) provide maximum control over data and model security, ideal for sensitive applications.

API Security:

When using managed APIs (OpenAI, Replicate), review their security documentation and ensure they meet your compliance requirements.

Cost Analysis

Open-Source Frameworks:

TensorFlow and PyTorch are free, but you'll pay for:

- Cloud compute resources (GPUs/TPUs)

- Storage for datasets and models

- Infrastructure management (if self-hosted)

- DevOps expertise

Managed Platforms:

Cloud ML Services typically charge for:

- Training compute time (usually by the hour)

- Inference requests (per prediction or per hour)

- Storage and data transfer

- Additional features (AutoML, monitoring)

API-Based Solutions:

OpenAI and Replicate use usage-based pricing:

- Per-token for language models

- Per-request for other models

- Costs can be unpredictable at scale

- No infrastructure management costs
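To make the trade-off between usage-based and fixed infrastructure pricing concrete, here is a small worked sketch. All prices and volumes are made-up placeholders, not any vendor's actual rates, and the function names are hypothetical.

```python
def api_monthly_cost(
    requests_per_month: int,
    tokens_per_request: int,
    price_per_1k_tokens: float,
) -> float:
    """Estimated monthly bill for a per-token API."""
    return requests_per_month * tokens_per_request / 1000 * price_per_1k_tokens


def break_even_requests(
    fixed_monthly_cost: float,
    tokens_per_request: int,
    price_per_1k_tokens: float,
) -> float:
    """Monthly request volume at which a fixed-cost deployment costs the same as the API."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return fixed_monthly_cost / cost_per_request


# Placeholder figures: 100k requests, 500 tokens each, $0.002 per 1k tokens.
monthly = api_monthly_cost(100_000, 500, 0.002)
print(f"API cost: ${monthly:.2f} per month")

# Placeholder figure: a self-hosted GPU instance at $600 per month.
threshold = break_even_requests(600.0, 500, 0.002)
print(f"Break-even: about {round(threshold):,} requests per month")
```

Below the break-even volume the per-token API is cheaper under these assumptions; above it, fixed infrastructure starts to pay off, before counting the DevOps time it requires.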

Migration Strategies

From Hugging Face to Alternatives:

To PyTorch:

- Most Hugging Face models are PyTorch-based, making migration straightforward

- Load models using standard PyTorch APIs

- Implement custom training loops or use PyTorch Lightning

To TensorFlow:

- Convert models using ONNX or direct PyTorch-to-TensorFlow converters

- Leverage TensorFlow Hub for pre-trained alternatives

- Use TensorFlow's SavedModel format for deployment

To Cloud Platforms:

- Upload existing models to SageMaker, Vertex AI, or Azure ML

- Use platform-native training for new models

- Leverage managed endpoints for inference

Best Practices for Platform Selection

1. Assess Your Requirements:

- Project scale and expected growth

- Team expertise and preferences

- Budget constraints

- Deployment environment (cloud, edge, mobile)

- Compliance requirements

2. Start Small:

- Prototype with multiple frameworks

- Test deployment workflows

- Evaluate documentation and community support

- Measure actual performance on your specific use case
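Measuring on your own use case can start with something as simple as the timing harness below. It is a sketch using only the standard library; `median_runtime`, `speedup`, and the two workload functions are hypothetical stand-ins for the candidate pipelines you would actually compare.

```python
import time
from statistics import median
from typing import Callable


def median_runtime(fn: Callable[[], object], repeats: int = 5) -> float:
    """Median wall-clock time of fn over several runs, in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return median(timings)


def speedup(baseline: Callable[[], object], candidate: Callable[[], object],
            repeats: int = 5) -> float:
    """How many times faster the candidate is than the baseline."""
    return median_runtime(baseline, repeats) / median_runtime(candidate, repeats)


# Stand-in workloads; replace with calls into the frameworks being compared.
def baseline_workload() -> int:
    return sum([i * i for i in range(200_000)])


def candidate_workload() -> int:
    return sum(i * i for i in range(20_000))


print(f"speedup: {speedup(baseline_workload, candidate_workload):.1f}x")
```

Using the median rather than the mean reduces the influence of one-off pauses, such as a cold cache on the first run.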

3. Consider Long-Term Maintenance:

- Framework stability and update frequency

- Community activity and longevity

- Vendor lock-in implications

- Team training requirements

4. Plan for Scalability:

- Distributed training capabilities

- Multi-GPU/multi-node support

- Inference optimization options

- Cost scaling characteristics

Common Pitfalls to Avoid

1. Choosing Based on Hype:

Select frameworks based on your specific needs, not industry trends. What works for large tech companies may not suit your project.

2. Ignoring Deployment:

In 2025, development experience, infrastructure, and portability are three different battles. Consider the entire pipeline from development to production.

3. Underestimating Learning Curves:

Budget time for team training. Framework expertise significantly impacts productivity and project success.

4. Overlooking Hidden Costs:

Cloud platforms' actual costs often exceed initial estimates. Monitor usage and optimize continuously.

Future-Proofing Your ML Stack

Cross-Platform Skills:

For a well-rounded skill set, start with PyTorch and layer in TensorFlow (via Keras or TFLite) as needed. Understanding multiple frameworks provides flexibility as the landscape evolves.

Standard Formats:

Leverage ONNX for model portability across frameworks and deployment targets. This reduces vendor lock-in and enables optimization flexibility.

Modular Architecture:

Design ML pipelines with clear separation between training, inference, and application logic. This facilitates framework switching if requirements change.
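One way to sketch that separation is to have application code depend on a small interface rather than on a framework. Everything here is illustrative: `Predictor`, `ThresholdPredictor`, `train`, and `handle_request` are hypothetical names, and the "model" is a toy threshold so the example runs without any ML library.

```python
from typing import Dict, List, Protocol


class Predictor(Protocol):
    """The only contract the application layer relies on."""

    def predict(self, inputs: List[float]) -> List[float]: ...


class ThresholdPredictor:
    """Stand-in for a framework-specific model wrapper (PyTorch, TensorFlow, ...)."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, inputs: List[float]) -> List[float]:
        return [1.0 if x >= self.threshold else 0.0 for x in inputs]


def train(data: List[float]) -> Predictor:
    """Training stage: 'fits' a threshold at the mean of the data."""
    return ThresholdPredictor(threshold=sum(data) / len(data))


def handle_request(model: Predictor, payload: List[float]) -> Dict[str, List[float]]:
    """Application logic: knows nothing about how the model was built."""
    return {"predictions": model.predict(payload)}


model = train([1.0, 2.0, 3.0])
print(handle_request(model, [0.5, 2.5]))  # {'predictions': [0.0, 1.0]}
```

Swapping frameworks then means writing a new class that satisfies `Predictor`, while `handle_request` and its callers stay unchanged.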

Continuous Learning:

The ML landscape evolves rapidly. Stay updated through conferences, research papers, and community engagement. Both TensorFlow and PyTorch release significant updates regularly.

Conclusion: Finding Your Perfect Match

The quest for the ideal ML platform isn't about finding the "best" framework—it's about finding the right fit for your specific needs, team, and project constraints. The best framework is the one your team actually understands and uses effectively.

Quick Selection Guide:

- Need enterprise deployment? → TensorFlow, SageMaker, or Vertex AI

- Doing cutting-edge research? → PyTorch

- Want quick API integration? → OpenAI API

- Need full infrastructure control? → BentoML or Northflank

- Starting with minimal investment? → Modal or Replicate

- Already on AWS/GCP/Azure? → Use respective cloud ML platforms

The machine learning ecosystem in 2025 offers unprecedented choice and capability. Whether you prioritize research flexibility, production stability, cost optimization, or ease of use, there's a platform designed for your workflow.

Remember: the framework that powers your ML applications matters less than your ability to deliver value to users. Start with the tool that removes barriers for your team, and don't hesitate to evolve your stack as requirements change.

Stay Connected: Subscribe to industry newsletters, join framework-specific communities, and contribute to open-source projects. The ML field thrives on collaboration and knowledge sharing.
